This is a draft

Abstract

1) Introduction

The purpose is to understand where Grasslands are, or where Grasslands have historically been, so that we may better manage the land with respect to this classification. The following methods are to identify USDA Natural Resource Conservation Service Ecological Sites that are comprised with the Historical Climax Community that can be classified as 'Grassland'.

1a.) Background

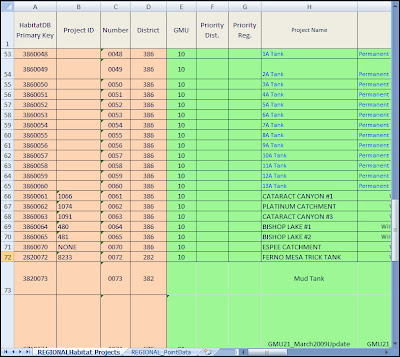

The Natural Resources Conservation Service maintains a repository for data associated with the collection of forestland and rangeland plot data--that supports the development of Ecological Site Descriptions. Currently, Ecological Site Information System website automatically generates these Ecological Site Descriptions (ESD) from the ESIS Database; however, the ESDs are only available for those Ecological Sites that are Approved, there are 390 approved ESDs for the state of Arizona. There is an alternative set of data that is provided by the NRCS, the Adhoc Summary of an Ecological Site; the data provided in these summaries is a list of 133 Ecological Sites for the state of Arizona. Previous efforts to classify Ecological Sites as Grasslands and Savannahs, high and low producing, relied on the ESD Annual Production by Plant Type (See Table Below).

1) Get the polygon Map Unit Key: Using the Map Unit Key from the Shapefile of Soil Survey Areas from Arizona.

2) Get the Ecological Site Key for each Polygon: Retrieving the 'cokey' from the SSURGO database (componenet Table), by joining from the Shapefile Table. Retrieving the 'coecoclassKey' from the SSURGO database (coecoclass Table), by joining the component Table. The Ecological Sites data is joined to the SSURGO database by an ID (like R030XC313AZ).

3) Build Table for Analysis: Sum the production of each plant in the Ecological Site by group. Each plant in the SSURGO database has a key (like ACHY or ABCO) that relates to the PLANTS database. The PLANTS database classifies the plants into groups; so, I imported the PLANTS table that gives me those categories and joined it to the SSURGO database. I downloaded the The Plants database, using the advanced query.

2) Materials and Methods

2a) Material List

I used the column 'Growth Habit' to classify the plants in the SSURGO database--using the PLANTS ID. I Imagine I will need a 'Like' statement in my SQL to get the 4 classes I desire--though Graminoid is the grass and that is straight forward (meaning its not mixed like Forb/herb or Forb/herb, Shrub; see below all the possible classes of plants--by the Growth Habit Column).

The available Ecological Site data that can be downloaded over the ESIS website

2b) Methods List

1) Download the data. I downloaded the whole state of Arizona Soil Survey data from the Web Soil Survey Website. (You can also download parts at the Soil Data Mart. after waiting a couple of hours for my data request to be processed, I was sent an email with the download link

2) The downloads for these Soil Survey datasets include:

i) Shapefile for the Soil Survey (Area the IDs for these look like 'soil_az627').

ii) Shapefile of other geographic features (cool!).

iii) Tabular Data.

iv) XML Metadata and README files.

v) Maybe an Microsoft Access Database Template, depending on how you've downloaded the SA.

3) If you don't already have a Template Database, download one at the Soil Data Mart. I just tried to download the most current for my state; beware there is a National Park Service NPS template also. So, if you look at the data files you downloaded, you will see a folder that is called 'Tabular'. The 70 or so text files found in here are used to build your own database (but you can open it in Excel, like a .csv but using pipes, '|', instead, some are individual tables of the Survey Area

4) Open the Template Access Database, enable Macros to run, using the Macro Browser on the Left--find the Import Macro. Run the Import Macro on each Survey Area Folders Tabular SubFolder.

5) Download the Ecological Site Information System data set. I browsed to the rangeland site, and performed an 'Adhoc Summary'; this provided me with text file of the ecological sites data set. This is (as I understand it) only the 'Approved Ecological Sites' and there are reports generated for each ecological site that has more data than what is given in this download--so for reference I've made a hyperlink list of all ecological sites and their Ids. If you've made it this far, well, your doing damn good--I did the whole state and I didn't know what I was doing so I got pretty involved in all the documentation--but check out what we get to do next!

The data is downloaded, now we need to create our methodology for classifying grasslands. Overview: I have the shapefiles for all the Survey Areas merged into one. I generalized the layer so all the polygon records are merged together--based on the mukey, the Map Unit Key. I have the State of Arizona Access databases (I have 2 because one grew larger than 2 GB); now I've put both Access databases up into a SQL Server database, so I could combine them into one database (very nice :P). I've taken the geodatabase ,from my merged and generalized shapefile, imported it into my database, so I have the list of Map Unit keys from the Map Units of Arizona.

And now we can write the query to answer the question... Where are the grasslands? More specifically, Using the 'mapunit', 'component', 'coecoclass' tables from the SSURGO database and the 'ShapefileTable' I will, using a little SQLish talk here, Select the MUKEY.ShapefileTable and Join these Ids to the mukey.component. With these records, take cokey.component and Join to cokey.coecoclass--now we have the Ecological Site Ids (R030XC313AZ) and names (Limy Upland 10-13" p.z. Deep). To get the data from the downloaded ecological site table continue the join using the Ecological Site IDs.

Results

Discussion

I cannot stress enough how important it is to read and read again all of the documentation that comes with the downloaded data sets, including Metadata, ReadMe files, and all the online literature.

Acknowledgments

Literature Cited

Abstract

1) Introduction

The purpose is to understand where Grasslands are, or where Grasslands have historically been, so that we may better manage the land with respect to this classification. The following methods are to identify USDA Natural Resource Conservation Service Ecological Sites that are comprised with the Historical Climax Community that can be classified as 'Grassland'.

1a.) Background

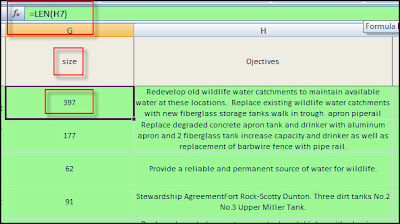

The Natural Resources Conservation Service maintains a repository for data associated with the collection of forestland and rangeland plot data--that supports the development of Ecological Site Descriptions. Currently, Ecological Site Information System website automatically generates these Ecological Site Descriptions (ESD) from the ESIS Database; however, the ESDs are only available for those Ecological Sites that are Approved, there are 390 approved ESDs for the state of Arizona. There is an alternative set of data that is provided by the NRCS, the Adhoc Summary of an Ecological Site; the data provided in these summaries is a list of 133 Ecological Sites for the state of Arizona. Previous efforts to classify Ecological Sites as Grasslands and Savannahs, high and low producing, relied on the ESD Annual Production by Plant Type (See Table Below).

Grasslands were delineated from savannas based on the low annual production value of trees that is stated within each ESD. An Ecological Site was classified as grassland when trees had a low annual production value ≤ 30 lbs/acre. A low annual production value that was > 30 lbs/acre was classified as a savanna.While keeping some of the above methods, the following research will require changes so that all 795 ecological sites in Arizona can be classified. I will be relying on a dataset called SSURGO (NRCS, Soil Survey Geographic Database), ESIS (NRCS, Ecological Site Information System), and PLANTS (NRCS, Plants Database). In summary, the process that I'm developing includes:

Low versus high productivity of the each grassland/ savanna ecological site was determined based on the annual production of grass/grass-like plant type that is stated within each ESD. A grassland/ savanna ecological site was classified as having high annual grass production when the low annual production value was ≥ 400 lbs/acre. A low annual production value that was <400 lbs/acre was classified as having a low annual grass production (Previous research performed by N.A, AZGFD).

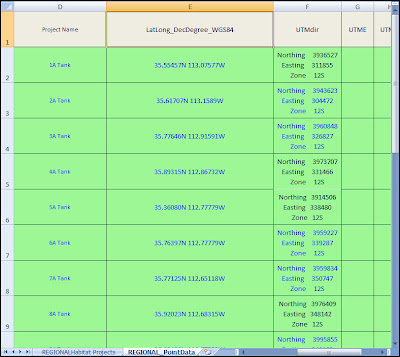

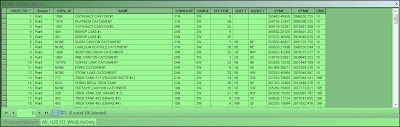

1) Get the polygon Map Unit Key: Using the Map Unit Key from the Shapefile of Soil Survey Areas from Arizona.

2) Get the Ecological Site Key for each Polygon: Retrieving the 'cokey' from the SSURGO database (componenet Table), by joining from the Shapefile Table. Retrieving the 'coecoclassKey' from the SSURGO database (coecoclass Table), by joining the component Table. The Ecological Sites data is joined to the SSURGO database by an ID (like R030XC313AZ).

3) Build Table for Analysis: Sum the production of each plant in the Ecological Site by group. Each plant in the SSURGO database has a key (like ACHY or ABCO) that relates to the PLANTS database. The PLANTS database classifies the plants into groups; so, I imported the PLANTS table that gives me those categories and joined it to the SSURGO database. I downloaded the The Plants database, using the advanced query.

| Ecological Sites that are currently approved. | Ecological Sites from Ad Hoc Data Download | Ecological Sites in Arizona |

| 390 | 133 | 795 |

| SSURGO Tables that join a map unit to component and coecoclass (Ecological Site),from the web, USDA, 2012 |

|

| SQL Query to determine how many ecosites are in Arizona (This query returned 795 records) |

| SELECT distinct(ecoclassid) FROM SSURGO_072012.[dbo].[Shapefile_Soil_Surveys_MapKeys] shapefile /* 3900 rows in shapefile */ INNER JOIN SSURGO_072012.[dbo].[component] component ON component.mukey = shapefile.MUKEY /* 7029 rows in component same after join many map units have many component records.*/ INNER JOIN SSURGO_072012.[dbo].[coecoclass] coecoclass ON coecoclass.cokey = component.cokey order by ecoclassid /* Not every componet has an ecoclass, but nearly all 6027 records after join. */ |

| Ecological Site Description Table |

|

| Other Table summary numbers |

| 44877 Plant Records for all the Ecological Sites, 676 distinct plant symbols, 4926 distinct cokeys, |

| 4912 Distinct cokeys in coeplants SSURGO table |

| 5683 records in coecoclass SSURGO table, 5010 Distinct cokey's, 795 distinct ecoclassid's |

| 7030 rows in component SSURGO table, 6791 distinct cokeys, 3901 distinct mukeys |

2) Materials and Methods

2a) Material List

I used the column 'Growth Habit' to classify the plants in the SSURGO database--using the PLANTS ID. I Imagine I will need a 'Like' statement in my SQL to get the 4 classes I desire--though Graminoid is the grass and that is straight forward (meaning its not mixed like Forb/herb or Forb/herb, Shrub; see below all the possible classes of plants--by the Growth Habit Column).

The Growth Habit Column from the PLANTS Database

- **GROWTH HABIT** Forb/herb Tree, Shrub, Subshrub Vine, Subshrub, Forb/herb Graminoid Shrub, Forb/herb, Subshrub, Vine Subshrub, Shrub, Vine Forb/herb, Shrub Subshrub, Forb/herb Shrub, Subshrub Vine, SubshrubTree, Shrub, Forb/herb Shrub, Tree Vine Tree, Shrub, Vine Tree NULL Subshrub Tree, Subshrub, Shrub Subshrub, Shrub, Graminoid Shrub, Forb/herb, Subshrub Shrub, Vine Forb/herb, Subshrub Forb/herb, Shrub, Subshrub Forb/herb, Shrub, Subshrub, Tree Tree, Shrub Shrub Forb/herb, Shrub, Subshrub, Vine Vine, Forb/herb Subshrub, Shrub Vine, Shrub Tree, Shrub, Subshrub, Forb/herb Shrub, Subshrub, Tree Forb/herb, Vine Subshrub, Shrub, Forb/herb

2b) Methods List

1) Download the data. I downloaded the whole state of Arizona Soil Survey data from the Web Soil Survey Website. (You can also download parts at the Soil Data Mart. after waiting a couple of hours for my data request to be processed, I was sent an email with the download link

2) The downloads for these Soil Survey datasets include:

i) Shapefile for the Soil Survey (Area the IDs for these look like 'soil_az627').

ii) Shapefile of other geographic features (cool!).

iii) Tabular Data.

iv) XML Metadata and README files.

v) Maybe an Microsoft Access Database Template, depending on how you've downloaded the SA.

3) If you don't already have a Template Database, download one at the Soil Data Mart. I just tried to download the most current for my state; beware there is a National Park Service NPS template also. So, if you look at the data files you downloaded, you will see a folder that is called 'Tabular'. The 70 or so text files found in here are used to build your own database (but you can open it in Excel, like a .csv but using pipes, '|', instead, some are individual tables of the Survey Area

4) Open the Template Access Database, enable Macros to run, using the Macro Browser on the Left--find the Import Macro. Run the Import Macro on each Survey Area Folders Tabular SubFolder.

5) Download the Ecological Site Information System data set. I browsed to the rangeland site, and performed an 'Adhoc Summary'; this provided me with text file of the ecological sites data set. This is (as I understand it) only the 'Approved Ecological Sites' and there are reports generated for each ecological site that has more data than what is given in this download--so for reference I've made a hyperlink list of all ecological sites and their Ids. If you've made it this far, well, your doing damn good--I did the whole state and I didn't know what I was doing so I got pretty involved in all the documentation--but check out what we get to do next!

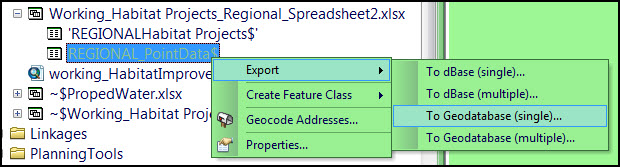

The data is downloaded, now we need to create our methodology for classifying grasslands. Overview: I have the shapefiles for all the Survey Areas merged into one. I generalized the layer so all the polygon records are merged together--based on the mukey, the Map Unit Key. I have the State of Arizona Access databases (I have 2 because one grew larger than 2 GB); now I've put both Access databases up into a SQL Server database, so I could combine them into one database (very nice :P). I've taken the geodatabase ,from my merged and generalized shapefile, imported it into my database, so I have the list of Map Unit keys from the Map Units of Arizona.

And now we can write the query to answer the question... Where are the grasslands? More specifically, Using the 'mapunit', 'component', 'coecoclass' tables from the SSURGO database and the 'ShapefileTable' I will, using a little SQLish talk here, Select the MUKEY.ShapefileTable and Join these Ids to the mukey.component. With these records, take cokey.component and Join to cokey.coecoclass--now we have the Ecological Site Ids (R030XC313AZ) and names (Limy Upland 10-13" p.z. Deep). To get the data from the downloaded ecological site table continue the join using the Ecological Site IDs.

Info | Query |

Intermediate join on component table for all the map units | SELECT * FROM SSURGO_072012.[dbo].[Shapefile_Soil_Surveys_MapKeys] shapefile |

Intermediate join on component table and coecoclass for all the cokeys | SELECT * FROM SSURGO_072012.[dbo].[Shapefile_Soil_Surveys_MapKeys] shapefile |

Built the EcoSitesAndPlants Intermediate Table. Each record, roughly speaking, has the ecosite ,plant group, production amount. 36879 records. | select ecosites.cokey, ecoclassid, ecoclassname, [Scientific Name], [Common Name], Category, Duration, [Growth Habit], ecoclasstypename, forestunprod, rangeprod |

Results

Discussion

I cannot stress enough how important it is to read and read again all of the documentation that comes with the downloaded data sets, including Metadata, ReadMe files, and all the online literature.

Acknowledgments

Literature Cited